Principle of Operation – Dynamic Image Analysis (Flow Imaging Microscopy)

Background Information

Investigators use particle characterization methods to better understand key properties that can affect an end product or the manufacturing process. As development progresses, these methods are typically transferred to manufacturing, where various parameters are measured and controlled to ensure the manufacturability and efficacy of the final product.

For many years, particle size has been a vital characterization measurement. Most established techniques for particle size characterization are indirect: they derive size information by assuming that every measured particle is round. Most industrial particles are not round, which casts significant doubt on whether size-only techniques provide enough characterization information.

Irregular particle shape can significantly affect how particles interact with each other, how they flow, and how they compact, ultimately affecting product efficacy. For this reason, scientists long ago began characterizing particles by both shape and size using direct measurement techniques such as microscopy. Although slow and tedious, microscopy allows users to obtain qualitative information about their raw material particles.

Image analysis as it applies to particle shape was first explored in 1963, when the “Krumbein Scale” was presented to give geologists a standardized way to assess particle roundness and sphericity. The scale was visual: particles were compared against sample images printed on a sheet of paper, so the assessment was subjective. Still, it shows that for many decades understanding particle shape has been nearly as critical as understanding particle size. Over time, scientists used microscopes to view small sample populations to better understand the shape of their particles.

It was not until the early 1990s that improved machine vision cameras and higher-performing computers made it possible to analyze particle shape in a more standardized way and with much larger particle samples. Image analysis, whether the particles are static or moving, relies on very fast image processing.

How does dynamic image analysis work? The Basics

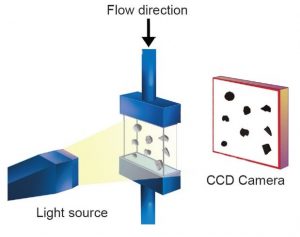

Because this is a high-speed technique designed to measure tens of thousands of particles in seconds or minutes, particles are suspended in a fluid so that they are well dispersed and homogeneously distributed. The suspension flows through a flow cell. An illumination source on one side of the flow cell shines light through the cell toward a lens and digital camera on the other side. The camera records dark silhouettes of the particles and sends the images to a computer in grayscale format.
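Because particles are imaged while they move through the flow cell, the camera exposure has to be short enough that a particle barely moves while the shutter is open; a common rule of thumb is less than about one pixel of travel. The sketch below illustrates that arithmetic. The flow speed, exposure times, pixel size, and the one-pixel threshold are hypothetical values chosen for illustration, not specifications of any instrument.

```python
def motion_blur_px(flow_speed_mm_s, exposure_us, um_per_px):
    """Pixels a particle travels during one exposure (its motion-blur length)."""
    speed_um_per_s = flow_speed_mm_s * 1000.0  # mm/s -> um/s
    exposure_s = exposure_us * 1e-6            # us -> s
    travel_um = speed_um_per_s * exposure_s
    return travel_um / um_per_px


# Hypothetical setup: 10 mm/s flow, 0.69 um of sample per image pixel.
UM_PER_PX = 0.69
for exposure_us in (10, 100, 1000):
    blur = motion_blur_px(10.0, exposure_us, UM_PER_PX)
    verdict = "sharp" if blur < 1.0 else "blurred"  # rule of thumb: under 1 px of travel
    print(f"{exposure_us:>5} us exposure -> {blur:.2f} px of blur ({verdict})")
```

Shortening the exposure (often paired with a bright, strobed light source) is what keeps moving particles sharp at a given flow speed.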

The Principle of Operation

-

Sample Introduction – Wet or dry particles are introduced into the measurement system in a controlled, steady flow.

-

Illumination – A bright, high-intensity light source (LED or halogen) provides optimal contrast for edge detection.

-

Image Capture – A high-speed camera captures sharp, blur-free images of each particle in motion.

-

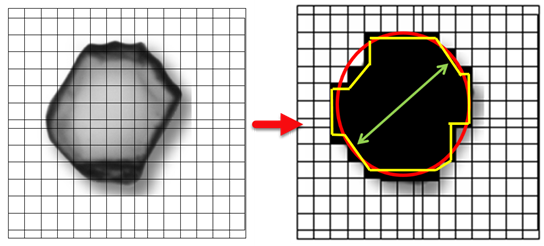

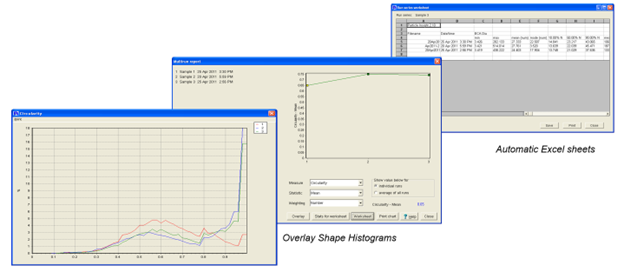

Automated Analysis – Advanced software measures each particle’s size and shape, while also storing its image for later review. The analysis software converts each image to a binary format. Various rejection and thresholding parameters are applied and the resulting binary pixelized image is used to calculate the 30+ shape parameters for each particle. At the same time, each particle is saved in a thumbnail format as objective evidence of all the measured particles.
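The analysis step can be illustrated with a deliberately simplified sketch: threshold a grayscale frame so that dark silhouette pixels become particle pixels, group touching pixels into individual particles, reject specks below a minimum size, and compute a few measures per particle. The threshold value, the minimum-area rejection rule, 4-connectivity, and the three measures (area, equivalent circular diameter, bounding-box aspect ratio) are illustrative assumptions; commercial software computes many more parameters with its own algorithms.

```python
import math
from collections import deque


def binarize(gray, threshold):
    """Mark pixels darker than `threshold` as particle (True), others as background."""
    return [[px < threshold for px in row] for row in gray]


def label_particles(binary):
    """Group particle pixels into separate blobs using a 4-connected flood fill."""
    rows, cols = len(binary), len(binary[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r in range(rows):
        for c in range(cols):
            if binary[r][c] and not seen[r][c]:
                pixels, queue = [], deque([(r, c)])
                seen[r][c] = True
                while queue:
                    y, x = queue.popleft()
                    pixels.append((y, x))
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and binary[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                blobs.append(pixels)
    return blobs


def measure(pixels, um_per_px, min_area_px=2):
    """Simple size/shape measures for one blob, or None if rejected as too small."""
    area_px = len(pixels)
    if area_px < min_area_px:
        return None  # hypothetical rejection rule: ignore specks below a pixel count
    ys = [y for y, _ in pixels]
    xs = [x for _, x in pixels]
    height = max(ys) - min(ys) + 1
    width = max(xs) - min(xs) + 1
    area_um2 = area_px * um_per_px ** 2
    return {
        "area_um2": area_um2,
        "ecd_um": 2.0 * math.sqrt(area_um2 / math.pi),  # circle of equal projected area
        "aspect_ratio": min(width, height) / max(width, height),  # crude elongation
    }


# Hypothetical 5 x 6 grayscale frame: bright background, two dark silhouettes.
frame = [
    [200, 200, 200, 200, 200, 200],
    [200,  40,  40, 200, 200, 200],
    [200,  40,  40, 200,  30, 200],
    [200, 200, 200, 200,  30, 200],
    [200, 200, 200, 200,  30, 200],
]
blobs = label_particles(binarize(frame, threshold=128))
measured = [measure(b, um_per_px=1.0) for b in blobs]
results = [m for m in measured if m is not None]
for m in results:
    print(m)
```

The square blob comes out with an aspect ratio of 1.0 and the three-pixel line with 1/3, which is the kind of difference shape parameters are meant to capture.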

More Detail

Because dynamic image analysis captures images with a camera, it is important to understand a few fundamentals of image capture. The first is the field of view.

Field of view (FOV) is the area (x, y) of the imaging zone in which particles are measured. FOV size depends on lens magnification: the higher the magnification, the smaller the field of view. This is equivalent to zooming in with a camera to photograph something very small; increasing the magnification makes small particles appear larger on the screen. Adequate magnification is important because the larger a particle appears in the field of view, the more pixels fall on its edges. Because shape is calculated from the pixels a particle obscures, more pixels per particle means greater resolution and accuracy in the results. Conversely, decreasing magnification (zooming out) enlarges the field of view, capturing a larger area.
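The trade-off between magnification, field of view, and pixels per particle is simple arithmetic. The sketch below assumes a hypothetical camera sensor (2048 × 1536 pixels, 3.45 µm pixel pitch) behind lenses of 1× and 5× magnification; the numbers are illustrative and not taken from any particular instrument.

```python
def imaging_geometry(sensor_w_px, sensor_h_px, pixel_pitch_um, magnification):
    """Return (sample size covered by one pixel in um, (FOV width, FOV height) in um)."""
    um_per_px = pixel_pitch_um / magnification  # higher magnification -> finer sampling
    fov_um = (sensor_w_px * um_per_px, sensor_h_px * um_per_px)
    return um_per_px, fov_um


def pixels_across(particle_um, um_per_px):
    """How many pixels span a particle of the given size."""
    return particle_um / um_per_px


for mag in (1.0, 5.0):
    um_per_px, (fov_w, fov_h) = imaging_geometry(2048, 1536, 3.45, mag)
    print(f"{mag:.0f}x: FOV {fov_w / 1000:.2f} x {fov_h / 1000:.2f} mm, "
          f"{um_per_px:.2f} um/px, a 10 um particle spans "
          f"{pixels_across(10.0, um_per_px):.1f} px")
```

In this example, going from 1× to 5× shrinks the field of view from about 7.07 × 5.30 mm to about 1.41 × 1.06 mm, while a 10 µm particle grows from about 2.9 to about 14.5 pixels across.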

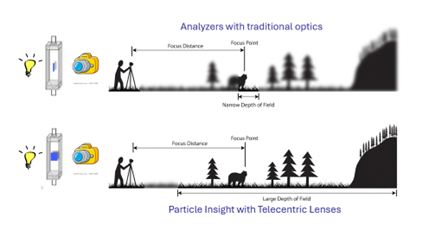

Depth of field is another important concept in dynamic image analysis. As in ordinary photography, depth of field is the range between the nearest and farthest distances from the camera within which objects, or in this case particles, are in focus. Dynamic image analysis always rejects particles that are out of focus.

Depth of field is controlled by the lens aperture diameter, typically specified as the f-number (f-stop). Reducing the aperture diameter (increasing the f-number) increases the depth of field: a smaller aperture admits only rays travelling at shallow angles to the optical axis, so points slightly in front of or behind the focal plane form smaller blur spots on the image plane and still appear sharp. For dynamic image analysis, the key point is that equipment manufacturers always aim to provide the greatest possible depth of field.
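To show how the f-number drives depth of field, the sketch below uses a standard close-up photographic approximation, DOF ≈ 2Nc(m + 1)/m², where N is the f-number, c the acceptable blur (circle of confusion) at the sensor, and m the magnification. This textbook formula is chosen for illustration only and is not the design method of any particular instrument; microscope-style optics are usually described by numerical aperture instead, and the values of c and m here are hypothetical.

```python
def depth_of_field_mm(f_number, coc_mm, magnification):
    """Total object-side depth of field from the close-up approximation
    DOF ~= 2 * N * c * (m + 1) / m**2."""
    m = magnification
    return 2.0 * f_number * coc_mm * (m + 1.0) / m ** 2


COC_MM = 0.0069  # hypothetical: a blur of two 3.45 um pixels still counts as "in focus"

for n in (4, 8, 16):
    for m in (1.0, 5.0):
        dof_um = depth_of_field_mm(n, COC_MM, m) * 1000
        print(f"f/{n}, {m:.0f}x: depth of field ~ {dof_um:.0f} um")
```

In this model, doubling the f-number doubles the depth of field, while raising the magnification shrinks it.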

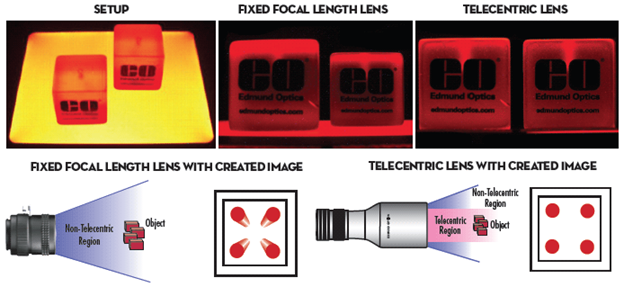

One last optical issue to understand is perspective error and how it is corrected. Perspective error occurs when a large depth of field allows particles at different distances from the lens to remain in focus, so that a farther particle “looks” smaller than an identical nearer one. The image (courtesy of Edmund Optics) shows one object appearing smaller because it is farther away, even though both objects are within the depth of field (both in focus). To address this, many dynamic image analysis instruments incorporate telecentric optics that correct for this error. Without correction, the data will be erroneous: particles within the field of view and depth of field but farther from the lens can be reported as smaller than identical particles closer to it.
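Perspective error can be made concrete with the thin-lens magnification of a conventional (non-telecentric) lens, m = f/(d − f), where f is the focal length and d the object distance. In the sketch below the software converts image size back to particle size using a single calibrated magnification. The focal length, distances, and particle size are hypothetical numbers chosen to make the effect visible, and a telecentric lens is modeled simply as one whose magnification does not change with distance.

```python
def thin_lens_magnification(focal_mm, object_distance_mm):
    """Magnification of a conventional (entocentric) thin lens: m = f / (d - f)."""
    return focal_mm / (object_distance_mm - focal_mm)


def reported_size_um(true_size_um, actual_mag, calibrated_mag):
    """Size reported when image size is converted back using one calibrated magnification."""
    image_size_um = true_size_um * actual_mag
    return image_size_um / calibrated_mag


FOCAL_MM = 50.0
CAL_MAG = thin_lens_magnification(FOCAL_MM, 100.0)  # calibrated at 100 mm -> 1.0x

for distance_mm in (100.0, 101.0):
    conventional = reported_size_um(
        50.0, thin_lens_magnification(FOCAL_MM, distance_mm), CAL_MAG)
    telecentric = reported_size_um(50.0, CAL_MAG, CAL_MAG)  # same magnification at any distance
    print(f"d = {distance_mm} mm: conventional {conventional:.2f} um, "
          f"telecentric {telecentric:.2f} um")
```

Both particles are 50 µm, yet with the conventional lens the one 1 mm farther away is reported as about 49.0 µm, while the telecentric model reports 50 µm for both.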

What do we get?

Now that you know how it works, what does this mean? What does all this give you as an end user?

Remember that particle size is the most widely used particle characterization measurement, and that the most common particle sizing techniques report results assuming all the particles are round. Because dynamic image analysis measures particles one by one (a number-based technique), it can provide much more information. The first is a particle size distribution reported both number-weighted and volume-weighted.

Number and Volume weighting:

Because dynamic imaging is a counting-based measurement, it can report statistical size data as both a number-weighted and a volume-weighted distribution. Number-weighted distributions are important for revealing the fine particles present in a sample. Volume-weighted distributions are equally important because they reveal small amounts of large particles, such as agglomerates. Both are always calculated and reported. Results can also be shown as a sieve correlation, which allows existing sieving results to be compared with automated dynamic imaging and eases method transfer.
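The difference between the two weightings can be sketched in a few lines: each measured particle adds one count to its size bin in the number-weighted distribution, and its volume to that bin in the volume-weighted distribution. The sketch assumes each particle's volume is that of a sphere of its equivalent diameter (V = πd³/6); the bin edges and particle sizes are hypothetical.

```python
import math


def weighted_histograms(diameters_um, bin_edges_um):
    """Return (number %, volume %) per bin. Volume assumes spheres: V = pi * d**3 / 6.
    Particles outside the outermost bin edges are ignored."""
    nbins = len(bin_edges_um) - 1
    counts = [0] * nbins
    volumes = [0.0] * nbins
    for d in diameters_um:
        for i in range(nbins):
            if bin_edges_um[i] <= d < bin_edges_um[i + 1]:
                counts[i] += 1
                volumes[i] += math.pi * d ** 3 / 6.0
                break
    total_n = sum(counts)
    total_v = sum(volumes)
    number_pct = [100.0 * c / total_n for c in counts]
    volume_pct = [100.0 * v / total_v for v in volumes]
    return number_pct, volume_pct


# Hypothetical sample: 95 fine 2 um particles plus 5 large 20 um agglomerates.
diameters = [2.0] * 95 + [20.0] * 5
edges = [0.0, 5.0, 10.0, 50.0]
number_pct, volume_pct = weighted_histograms(diameters, edges)
for lo, hi, n, v in zip(edges, edges[1:], number_pct, volume_pct):
    print(f"{lo:>4.0f}-{hi:<4.0f} um  number {n:5.1f} %  volume {v:5.1f} %")
```

Here the fines make up 95% of the particles by number but under 2% of the volume, while the five agglomerates dominate the volume-weighted result.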

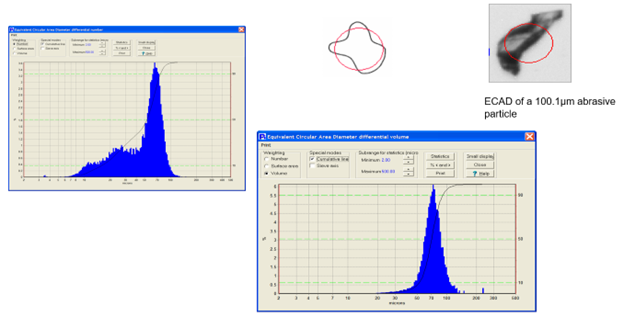

Above we show both the number-weighted and the volume-weighted particle size distributions of an abrasive sample. The size model used here reports each particle’s size as if the particle were round; customers typically use it to compare dynamic image analysis results with those of size-only techniques. As you can see, the two histograms are very different. The number-weighted histogram shows all of the fine particles in the sample, which, depending on the application, can be very important. In a filtration application, for example, a high number of fine particles can plug a filter very quickly, so the user must monitor them closely. The volume-weighted histogram is equally important when the presence of large particles or agglomerates is a concern.

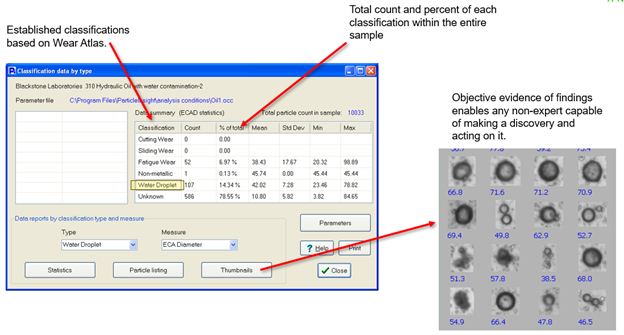

Counting and Concentration:

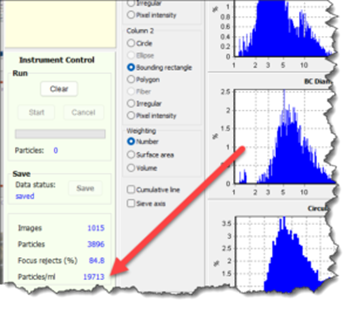

Because dynamic image analysis measures each individual particle, it reports particle counts and concentration directly. Concentration is displayed in real time as particles per milliliter. The total count is reported once the analysis is complete and can cover all particles or a single class or type of particle. This measurement is critical when working with samples that contain rare events, or whenever the concentration of a population or sub-population of particles must be known.

Counting instruments, such as Coulter counters and dynamic imaging instruments, also apply a dilution factor to account for any pre-dilution of the sample.
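The concentration arithmetic, including the dilution correction, can be sketched as follows. The analyzed volume, the counts, the "fibers" class, and the 1:10 dilution are hypothetical; the dilution factor is taken here as the total diluted volume divided by the volume of original sample it contains.

```python
def concentration_per_ml(particle_count, analyzed_volume_ml, dilution_factor=1.0):
    """Particles per mL of the ORIGINAL sample.

    dilution_factor = diluted volume / original sample volume,
    e.g. 1 mL sample + 9 mL diluent -> 10.
    """
    if analyzed_volume_ml <= 0:
        raise ValueError("analyzed volume must be positive")
    return particle_count / analyzed_volume_ml * dilution_factor


# Hypothetical run: 0.5 mL of a 1:10 pre-diluted sample was imaged.
class_counts = {"all particles": 12500, "fibers": 40}
for name, count in class_counts.items():
    conc = concentration_per_ml(count, 0.5, dilution_factor=10)
    print(f"{name}: {conc:,.0f} particles/mL in the original sample")
```

The same formula applies to the whole population or to any single class, which is how a sub-population concentration can be reported alongside the total.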

Viewing questionable statistics:

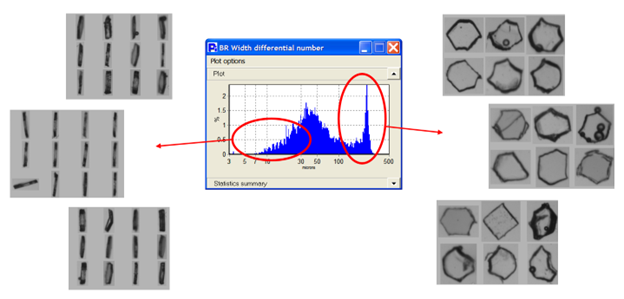

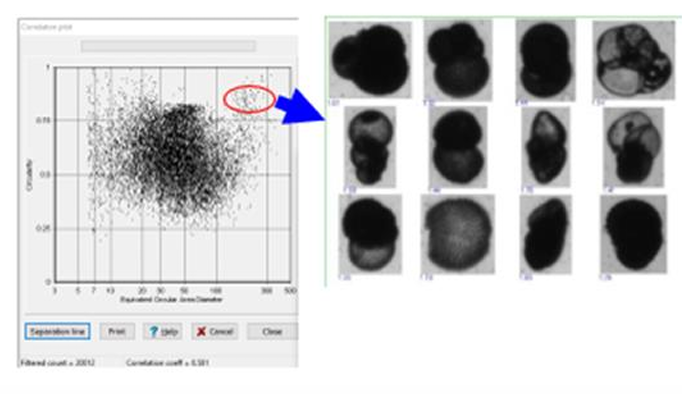

One very important feature of dynamic image analysis is the ability to view the particles behind a questionable statistical result. As the images illustrate, size-only instruments can produce histograms containing questionable data with no way to verify what that data represents. Dynamic imaging lets the user view the particles that make up any region of a size or shape distribution, giving end users objective evidence that only dynamic image analysis can provide.
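Linking a histogram bar back to particle images amounts to filtering the stored particle records by that bar's range. The sketch below uses a hypothetical record layout (an ID, two measurements, and a thumbnail path); real software stores its own data, so the field names and file paths are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Particle:
    particle_id: int
    ecd_um: float
    circularity: float
    thumbnail: str  # hypothetical path to the saved particle image


def particles_in_bin(particles, attribute, low, high):
    """Return the particles whose `attribute` lies in [low, high), i.e. one histogram bar."""
    return [p for p in particles if low <= getattr(p, attribute) < high]


particles = [
    Particle(1, 3.1, 0.92, "thumbs/0001.png"),
    Particle(2, 48.0, 0.41, "thumbs/0002.png"),
    Particle(3, 52.5, 0.38, "thumbs/0003.png"),
    Particle(4, 4.0, 0.95, "thumbs/0004.png"),
]

# Inspect the suspicious 40-60 um bar by listing the thumbnails behind it.
suspect = particles_in_bin(particles, "ecd_um", 40.0, 60.0)
for p in suspect:
    print(p.particle_id, p.thumbnail)
```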

Comparisons:

Dynamic imaging allows the end user to compare different sample lots or analysis runs not only by size but also by shape. Two samples may show little or no difference in size yet differ considerably in shape.
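A lot-to-lot comparison by both size and shape can be sketched by summarizing each lot with medians and flagging any measure that differs by more than a tolerance. The two lots, the 5% relative tolerance, and the choice of median ECD and median aspect ratio as summary measures are hypothetical; the example is built so that the lots match in size but differ in shape.

```python
from statistics import median


def summarize(lot):
    """lot: list of (ecd_um, aspect_ratio) pairs, one per particle."""
    return {
        "median_ecd_um": median(ecd for ecd, _ in lot),
        "median_aspect_ratio": median(ar for _, ar in lot),
    }


def compare_lots(lot_a, lot_b, rel_tol=0.05):
    """Flag each summary measure whose relative difference (vs. lot A) exceeds rel_tol."""
    a, b = summarize(lot_a), summarize(lot_b)
    return {key: abs(a[key] - b[key]) / abs(a[key]) > rel_tol for key in a}


lot_a = [(10.0, 0.90), (12.0, 0.88), (11.0, 0.91)]
lot_b = [(10.0, 0.55), (12.0, 0.60), (11.0, 0.58)]
print(compare_lots(lot_a, lot_b))
```

A size-only comparison of these two lots would report no difference; only the shape measure is flagged.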

Correlation plots:

Correlation plots show every analyzed particle at once, plotted against two measurements, with the goal of finding rare events. Without this correlation function, it would be nearly impossible to pick out a particle or set of particles among the tens of thousands analyzed. A correlation plot can be created from any two of the available shape measures.
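Selecting rare events on a correlation plot amounts to drawing a region on a scatter of two measurements and pulling out the particles that fall inside it. The sketch below uses a rectangular region on ECD versus circularity; the particle data, measurement names, and region limits are hypothetical.

```python
def select_region(particles, x_key, y_key, x_range, y_range):
    """Return particles whose (x, y) point on the correlation plot lies inside a rectangle."""
    (x_lo, x_hi), (y_lo, y_hi) = x_range, y_range
    return [p for p in particles
            if x_lo <= p[x_key] <= x_hi and y_lo <= p[y_key] <= y_hi]


# Hypothetical run: 10,000 small round particles plus two large, irregular ones.
particles = [{"id": i, "ecd_um": 5.0 + (i % 7) * 0.5, "circularity": 0.9}
             for i in range(10000)]
particles.append({"id": 10000, "ecd_um": 85.0, "circularity": 0.35})
particles.append({"id": 10001, "ecd_um": 120.0, "circularity": 0.28})

# Region drawn around the "large and irregular" corner of the plot.
rare = select_region(particles, "ecd_um", "circularity", (50.0, 500.0), (0.0, 0.5))
print(f"{len(rare)} of {len(particles)} particles in region:", [p["id"] for p in rare])
```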

Particle Classification:

Having more than 30 shape measurements for every particle makes it much easier for the software to tell particles apart. When a multi-component sample is analyzed, these measurements enable particle classification, a feature that lets the software separate and quantify the subpopulations of particles within the sample.
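Particle classification can be sketched as a set of rules applied to each particle's shape measurements, followed by a count per class. The class names, the two measures used, and the threshold values below are hypothetical; real classification software can combine many more parameters and may use statistical rather than fixed-threshold methods.

```python
from collections import Counter

# Hypothetical rules, checked in order: (class name, test on a particle's measures).
RULES = [
    ("elongated", lambda p: p["aspect_ratio"] < 0.3),
    ("round", lambda p: p["circularity"] >= 0.9),
]


def classify(particle, rules=RULES, default="irregular"):
    """Return the first class whose rule matches, or the default class."""
    for name, test in rules:
        if test(particle):
            return name
    return default


particles = [
    {"aspect_ratio": 0.15, "circularity": 0.30},
    {"aspect_ratio": 0.95, "circularity": 0.97},
    {"aspect_ratio": 0.70, "circularity": 0.60},
    {"aspect_ratio": 0.90, "circularity": 0.93},
]
counts = Counter(classify(p) for p in particles)
fractions = {name: n / len(particles) for name, n in counts.items()}
print(counts, fractions)
```

Combined with the concentration calculation, per-class counts like these give a concentration for each subpopulation.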

Dry and Wet Flow Capability – A Key Advantage

While many systems are limited to liquid-based flow imaging, Vision Analytical’s technology also supports dry particle flow analysis. This capability expands the range of applications to include powders, granules, and airborne particles — allowing you to characterize materials that competitors cannot measure in-flow.

To view this article on AZo Network, click here.

Why Flow Imaging Microscopy is Powerful

Flow Imaging Microscopy combines the statistical robustness of automated image capture with the depth of morphological detail only imaging can provide. This makes it invaluable for applications where:

- Visual verification is essential to confirm particle identity or source.
- Size and shape both influence product performance or quality.
- High throughput is required to analyze thousands of particles quickly.
- Materials must be analyzed in a dry state, where dry particle analysis offers unique insight.

Applications Across Industries

Flow Imaging Microscopy (Dynamic Image Analysis) is widely used in:

- Biopharmaceuticals – Detecting protein aggregates and particulate matter in injectables.
- Environmental Science – Monitoring microplastics and suspended solids in water.
- Lubricants and Fuels – Characterizing wear debris and contamination.
- Powder Materials – Measuring dry powders in manufacturing and research.
- Food & Beverage – Ensuring quality in suspensions and emulsions.

FAQ Section

What is Flow Imaging Microscopy?

Flow Imaging Microscopy, also known as Dynamic Image Analysis, is a method for capturing high-resolution images of particles in motion — either in a liquid flow cell or in a dry suspension system. This allows for precise measurement of size, shape, and concentration, along with visual records for every particle.

Can Flow Imaging Microscopy measure dry particles?

Yes. While many systems are limited to liquid samples, Vision Analytical’s technology also supports dry particle flow analysis. This enables accurate characterization of powders and granules, a capability that many competing systems do not offer.

Why choose Flow Imaging Microscopy over other particle analysis methods?

Unlike techniques that provide only size distribution, Flow Imaging Microscopy delivers both dimensional and morphological data, along with image thumbnails for visual verification. This makes it ideal for contamination analysis, quality control, and advanced research.

Quick Bullet Summary

At a Glance – Flow Imaging Microscopy with Vision Analytical:

- Wet & Dry Capability – Analyze both liquid suspensions and free-flowing dry particles.
- High-Speed Imaging – Thousands of particles measured in minutes.
- Detailed Morphology – Size, shape, and visual images for every particle.
- Broad Applications – From pharmaceuticals to environmental monitoring.
- Proven Technology – Trusted by labs and manufacturers worldwide.